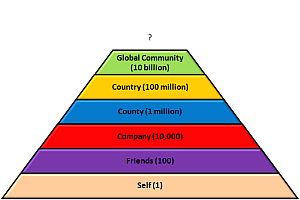

We are all a small piece of a complex system that extends well beyond the boundaries of our individual experience.

We all know this.

We also know that seeing the big picture is very helpful because it gives us context and meaning, and leads to better decisions and more effective actions.

We feel better when we know where we fit into the Big Picture – and we feel miserable when we do not.

And when our system is not working as well as we would like, we need to improve it; to do that we need to understand how it works, so that we change only what we need to.

To do that we need to see the Big Picture and to understand it.

So how do we build the Big Picture from the Small Bits?

Solving a jigsaw puzzle is a good metaphor for the collective challenge we face. Each of us holds a piece which we know very well because it is what we see, hear, touch, smell and taste every day. But how do we assemble the pieces so that we can all clearly see and appreciate the whole rather than dimly perceive a dysfunctional heap of bits?

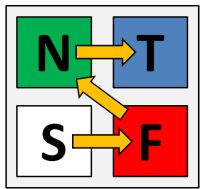

One strategy is to look for tell-tale features that indicate where a piece might fit – irrespective of the unique picture on it – such as the four corners.

We also use this method to group pieces that belong on the sides – but this is not enough to tell us which side and where on which side each piece fits.
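
For readers who like to see the logic spelled out, this first strategy can be sketched in Python. It is a minimal, hypothetical sketch and not a real puzzle-solving method: it assumes each piece is described by four numbers (top, right, bottom, left), with 0 meaning a flat border edge, and the names `classify`, `corner_position` and `group_pieces` are invented for illustration. Notice that it never looks at the picture on the piece.

```python
from collections import defaultdict

# Hypothetical encoding: a piece is (top, right, bottom, left), where 0 is a
# flat border edge and any non-zero number stands for a tab or a blank.
def classify(edges):
    """Strategy 1: group a piece by its flat edges, ignoring its picture."""
    flat = sum(1 for e in edges if e == 0)
    if flat >= 2:
        return "corner"
    if flat == 1:
        return "side"
    return "interior"

def corner_position(edges):
    """Say which corner a piece belongs in (assumes it has two flat edges)."""
    top, _, _, left = edges
    vertical = "top" if top == 0 else "bottom"
    horizontal = "left" if left == 0 else "right"
    return f"{vertical}-{horizontal}"

def group_pieces(pieces):
    """Sort a {name: edges} mapping into corner, side and interior groups."""
    groups = defaultdict(list)
    for name, edges in pieces.items():
        groups[classify(edges)].append(name)
    return dict(groups)

if __name__ == "__main__":
    heap = {"a": (0, 1, -2, 0), "b": (0, 3, 2, -1),
            "c": (2, -3, 1, -1), "d": (-1, 0, 0, 2)}
    print(group_pieces(heap))          # {'corner': ['a', 'd'], 'side': ['b'], 'interior': ['c']}
    print(corner_position(heap["a"]))  # top-left
    print(corner_position(heap["d"]))  # bottom-right
```

Like the strategy in the text, this tells us which group a piece belongs to, and even which corner, but not which side or where along it a side piece fits.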

So far all we have are some groups of bits – rough parts of the whole – but no clear view of the picture. To see that we need to look at the detail – the uniqueness of each piece.

Our next strategy is to look at the shapes of the edges to find the pieces that are complementary – that leave no gaps when fitted together. These are our potential neighbours. Sometimes there is only one bit that fits; sometimes there are many that fit well enough.

Our third strategy is to look at the patterns on the potential neighbours and to check for continuity because the picture should flow across the boundary – and a mismatch means we have made an error.
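
The second and third strategies can be sketched the same way. Again this is a hypothetical model assumed for illustration: each side has a shape code (0 for flat, +k for a tab, -k for the blank that exactly receives it) plus a tuple of colours sampled along the boundary, and `Side`, `Piece` and `right_neighbours` are invented names. Shape complementarity gives the potential neighbours; the colour check then rejects any whose picture does not flow across the join.

```python
from dataclasses import dataclass

# Hypothetical model: a side has a shape code (0 = flat border,
# +k = a tab, -k = the blank that receives it) and the colours
# sampled along it from top to bottom.
@dataclass(frozen=True)
class Side:
    shape: int
    colours: tuple

@dataclass(frozen=True)
class Piece:
    name: str
    left: Side
    right: Side

def shapes_complement(a: Side, b: Side) -> bool:
    """Strategy 2: a tab and a blank of the same shape leave no gap."""
    return a.shape != 0 and a.shape + b.shape == 0

def picture_flows(a: Side, b: Side) -> bool:
    """Strategy 3: the picture must be continuous across the join."""
    return a.colours == b.colours

def right_neighbours(piece, candidates):
    """Return (potential neighbours by shape, those whose picture also flows)."""
    by_shape = [c for c in candidates
                if c is not piece and shapes_complement(piece.right, c.left)]
    confirmed = [c for c in by_shape if picture_flows(piece.right, c.left)]
    return [c.name for c in by_shape], [c.name for c in confirmed]

if __name__ == "__main__":
    a = Piece("a", left=Side(0, ()), right=Side(2, ("blue", "blue")))
    b = Piece("b", left=Side(-2, ("blue", "blue")), right=Side(1, ("red",)))
    c = Piece("c", left=Side(-2, ("green", "blue")), right=Side(-1, ("red",)))
    print(right_neighbours(a, [a, b, c]))  # (['b', 'c'], ['b'])
```

Both `b` and `c` fit `a` well enough by shape, but only `b` passes the continuity check, which is exactly the "mismatch means we have made an error" test described above.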

What we have now is the edges of the picture and a heap of bits that go somewhere in the middle.

By connecting the edge pieces we can see that there are gaps, and this is an important insight.

It is not until we have a framework that spans the whole picture that the gaps become obvious.

But we do not know yet if our missing pieces are in the heap or not – we will not know that until we have solved the jigsaw puzzle.

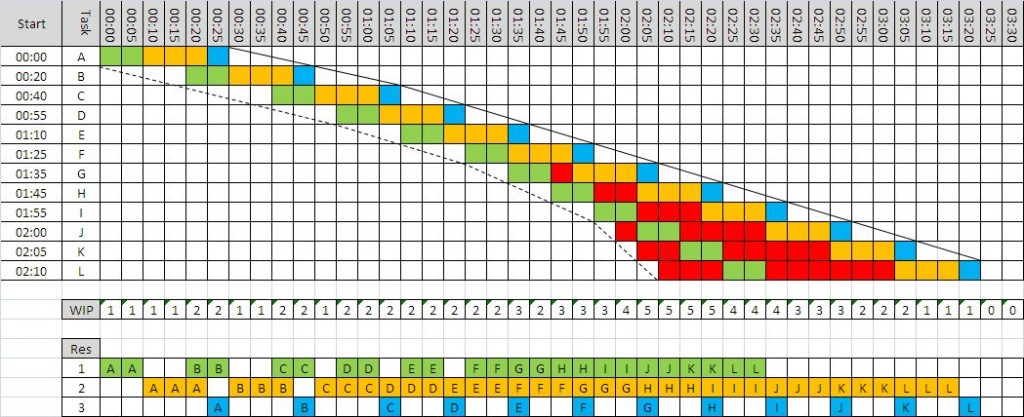

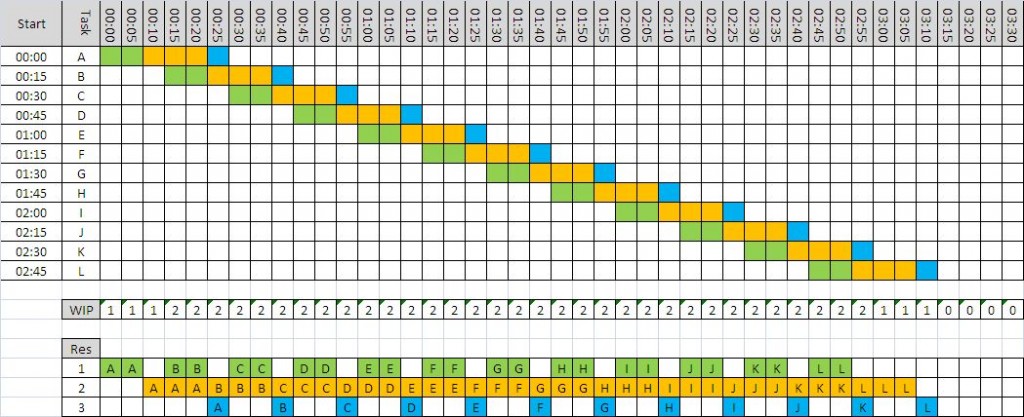

Throughout the problem-dissolving process we are using three levels of content:

- Data, which we gain through our senses, in this case our visual system;
- Information, which is the result of using context to classify the data, for example by shape and colour; and
- Knowledge, which we derive from past experience to help us make decisions: “That is a top-left corner so it goes there; that is an edge so it goes in that group; that edge matches that one so they might be neighbours and I will try fitting them together; the picture does not flow so they cannot be neighbours and I must separate them”.

The important point is that we do not need to Understand the picture to do this – we can just use “dumb” pattern-matching techniques, simple logic and brute force to decide which bits go together and which do not. A computer could do it – and we or the computer can solve the puzzle and still not recognise what we are looking at, understand what it means, or be able to make a wise decision.
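
To make the point that a computer could do this without understanding anything, here is a deliberately naive brute-force solver. It is a hypothetical sketch under stated assumptions: pieces are (top, right, bottom, left) shape codes with 0 for a flat border edge, pieces are never rotated, and `consistent` and `solve` are invented names. It tries every arrangement until one has flat edges exactly on the border and complementary shapes at every internal join; it checks shapes only and never knows what the picture shows.

```python
from itertools import permutations

# Hypothetical encoding: a piece is (top, right, bottom, left) shape codes;
# 0 = flat border edge, +k and -k = a tab and its matching blank. No rotation.
def consistent(grid, rows, cols):
    """Check one arrangement using simple logic only."""
    for r in range(rows):
        for c in range(cols):
            top, right, bottom, left = grid[r * cols + c]
            # Flat edges must lie exactly on the border of the picture.
            if (top == 0) != (r == 0) or (left == 0) != (c == 0):
                return False
            if (bottom == 0) != (r == rows - 1) or (right == 0) != (c == cols - 1):
                return False
            # Each internal join must be a tab meeting its matching blank.
            if c + 1 < cols and right + grid[r * cols + c + 1][3] != 0:
                return False
            if r + 1 < rows and bottom + grid[(r + 1) * cols + c][0] != 0:
                return False
    return True

def solve(pieces, rows, cols):
    """Brute force: return the first order of piece indices that fits, or None."""
    if len(pieces) != rows * cols:
        raise ValueError("need exactly rows * cols pieces")
    for order in permutations(range(len(pieces))):
        if consistent([pieces[i] for i in order], rows, cols):
            return list(order)
    return None

if __name__ == "__main__":
    heap = [(-3, 0, 0, -4), (0, 1, 2, 0), (-2, 4, 0, 0), (0, 0, 3, -1)]
    print(solve(heap, 2, 2))  # [1, 3, 2, 0]
```

The result lists which piece fills the top-left, top-right, bottom-left and bottom-right positions. Trying every permutation costs n! checks, so this is only practical for tiny puzzles; a real solver would place the corners and sides first and prune as it goes, much as the strategies above do.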

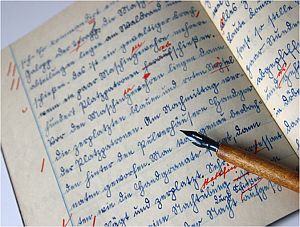

To do that we need to search for meaning – and that usually means looking for and recognising symbols that are labels for concepts and using the picture to reveal how they relate to each other.

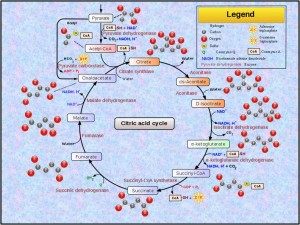

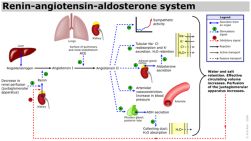

As we fit the neighbours together we see words and phrases that we may recognise – “Legend” and “cycle” for example – and we can use these labels to start to build a conceptual framework, and from that we create an expectation, just as we did with the corners and edges.

The word “cycle” implies a circle, which is often drawn as a curved line, so we can use this expectation to look for pieces of a circle and lay them out – just as we did with the edges.

We may not recognise all the symbols – “citric acid” for example – and that finding means that there is new knowledge hidden in the picture. By the end we may understand what those new symbols mean from the context that the Big Picture creates.

By searching for meaning we are doing more than mechanically completing a task – we are learning, expanding our knowledge and deepening our understanding.

But to do this we need to separate the heap of bits so they do not obscure each other and so we can see each clearly. When it is a mess the new learning and deeper understanding will elude us.

We have now found some pieces with lines on them that look like parts of a circle, so we can arrange them into an approximate sequence – and when we do that we are delighted to find that the pieces fit together, the pictures flow from one to the other, and there is a sense of order and structure starting to emerge from within the picture itself.

Until now the only structure we saw was the artificial and meaningless boundary. We now see a new and unfamiliar phrase “citric acid cycle” – what is that? Our curiosity is building.

As we progress we find repeated symbols that we now recognise but do not understand – red and grey circles linked together. In the top right under the word “Legend” we see the same symbols together with some words we do recognise – “hydrogen, carbon and oxygen”.

Ah ha! Now we can translate the unfamiliar symbols into familiar concepts, and now we suspect that this is something to do with chemistry. But what?

We are nearly there. Almost all the pieces are in place and we have identified where the last few fit.

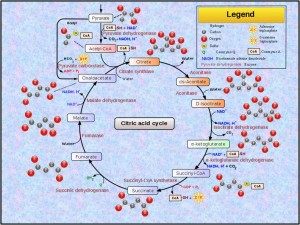

Now we can see that all the pieces are from the same jigsaw, there are none missing and there are no damaged, distorted, or duplicated pieces. The Big Picture looks complete.

We can see that the lines between the pieces are not part of the picture – they are artificial boundaries created when the picture was broken into parts – and useful only for helping us to re-assemble the big picture.

Now they are getting in the way – they are distracting us from seeing the picture as clearly as we could – so we can dispense with them – they have served their purpose.

We can also see that the pieces appear to be arranged in columns and rows, and that we could view our picture as a set of interlocked vertical strips or as a set of interlocked horizontal strips – but this is an artificial structure created by our artificial boundaries. The picture we are seeing transcends our artificial linear decomposition.

We erase all the artificial boundaries and the full picture emerges.

Now we can see that we have a chemical system where a series of reactions is linked in a cycle. We can see something called pyruvate coming in at the top left, and we recognise the symbols for water and CO2. We conclude that this might be part of the complex biochemical system called cellular respiration – the process by which the food that we eat and the oxygen that we breathe in are converted into energy and into the CO2 that we breathe out.

Wow!

And we can see that this is just part of a bigger map – the edges were also artificial and arbitrary! But where does the oxygen fit? And which bit is the energy? And what is the link between the carbohydrate that we eat and this new thing called pyruvate?

Our bigger picture and deeper understanding have generated a lot of new questions; there is so much more to explore, to learn and to understand!

Let us stop and reflect. What have we learned?

We have learned that our piece was not just one of a random heap of unconnected jigsaw bits; we have learned where our piece fits into a Bigger Picture; we have learned how our piece is an essential part of that picture; we have learned that there is a design in the picture and we have learned how we are part of that design.

And when we all know and we all understand the whole design and how it works then we all have a much better chance of being able to improve it in a rational, sensible, explainable and actionable way.

Building the System Picture from the disorganised heap of Step Parts is one of the key skills of an Improvement Science Practitioner.

And the more practice we get, the quicker we recognise what we are looking at, because there are relatively few effective system designs.

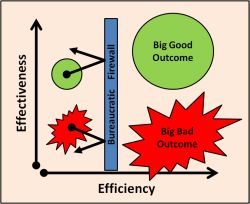

This insight is important because most unsolved problems are system problems – and the sooner we can diagnose the system design flaws that are the root causes of those problems, the sooner we can propose, test and implement solutions and experience the expected improvements.

That is a Win-Win-Win strategy.

That is systems engineering in a nutshell.

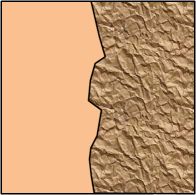

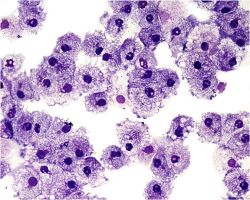

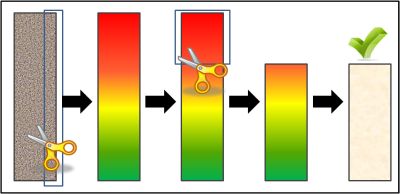

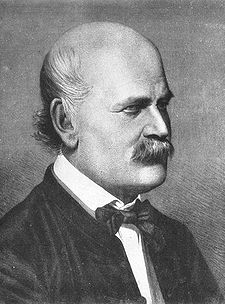

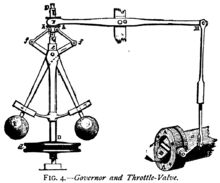

The human body is an amazing self-repairing system. It does this by being able to detect damage and to repair just the damaged part while still continuing to function. One visible example of this is how it repairs a broken bone. The skeleton is the hard, jointed framework that protects and supports the soft bits. Some of the soft bits, the muscles, both stabilise and move this framework of bones. Together they form the musculoskeletal system that gives us the power to move ourselves.

So when, by accident, we break a bone, how do we repair the damage? The secret is in the microscopic structure of the bone. Bone is not like concrete, solid and inert; it is a living tissue. Two of the types of microscopic cell that live in bone are the osteoclasts and the osteoblasts (osteo- is Greek for “bone”; -clast is Greek for “break” and -blast is Greek for “germ” in the sense of something that grows). Osteoclasts dissolve old bone and osteoblasts deposit new bone – so when they work together they can create bone, remodel bone, and repair bone.

It is humbling when we consider that millions of microscopic cells are able to coordinate this continuous, dynamic, adaptive, reparative behaviour with no central command-and-control system, no decision makers, no designers, no blueprints, no project managers. How is this biological miracle achieved? We are not sure – but we know that there must be a process.

There are people within organisations who have the same role as the osteoclast in the body. These people are called iconoclasts and what they do is dissolve dogma. They break up the rigid rules and regulations that create the corporate equivalent of concrete – but they are selective. Iconoclasts are sensitive to stress and to strain and they only dissolve the cultural concrete where it is getting in the way of improvement, that is, where dogma is blocking innovation. Iconoclasts question the status quo, and at the same time explain how it is causing a problem, offer alternatives, and predict the benefits of the innovation. Iconoclasts are not sceptics or cynics – they prepare the ground for change – they are facilitators.

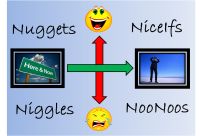

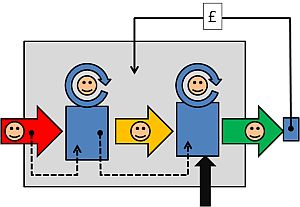

Improvement Scientists are like builders – they use the raw materials of ideas, experience, knowledge, understanding, creativity and enthusiasm and assemble them into new organisational structures. In doing so they fully accept that one day these structures will in turn be dismantled and rebuilt. That is the way of improvement. The dogma is relative and temporary rather than absolute and permanent. And the faster the structures can be disassembled and reassembled the more agile the organisation becomes and the more able it is to survive change.