Improvement Science is not just about removing the barriers that block improvement and building barriers to prevent deterioration – it is also about maintaining acceptable, stable and predictable performance.

In fact most of the time this is what we need our systems to do so that we can focus our attention on the areas for improvement rather than running around keeping all the plates spinning. Improving the ability of a system to maintain itself is a worthwhile and necessary objective.

Long-term stability cannot be achieved by assuming a stable context and creating a rigid solution, because the world is always changing. Long-term stability is achieved by creating resilient solutions that can adjust their behaviour, within limits, to their ever-changing context.

This self-adjusting behaviour of a system is called homeostasis.

The foundation for the concept of homeostasis was first proposed by Claude Bernard (1813-1878) who, unlike most of his contemporaries, believed that all living creatures were bound by the same physical laws as inanimate matter. In his words: “La fixité du milieu intérieur est la condition d’une vie libre et indépendante” (“The constancy of the internal environment is the condition for a free and independent life”).

The term homeostasis is attributed to Walter Bradford Cannon (1871-1945), who was a professor of physiology at Harvard Medical School and who popularized his theories in a book called The Wisdom of the Body (1932). Cannon described four principles of homeostasis:

- Constancy in an open system requires mechanisms that act to maintain this constancy.

- Steady-state conditions require that any tendency toward change automatically meets with factors that resist change.

- The regulating system that determines the homeostatic state consists of a number of cooperating mechanisms acting simultaneously or successively.

- Homeostasis does not occur by chance, but is the result of organised self-government.

Homeostasis is therefore an emergent behaviour of a system and is the result of organised, cooperating, automatic mechanisms. We know this by another name – feedback control – which is passing data from one part of a system to guide the actions of another part. Any system that does not have homeostatic feedback loops as part of its design will be inherently unstable – especially in a changing environment. And unstable means untrustworthy.
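
To make the idea of a feedback loop concrete, here is a minimal sketch in Python. It is only an illustration: the function name `simulate`, the constant disturbance and the `gain` value are assumptions chosen for the example, and simple proportional correction stands in for whatever mechanism a real system would use. It compares a system that is pushed steadily off target with no feedback against the same system when the measured error is fed back as a corrective action.

```python
def simulate(steps, gain, disturbance=1.0, target=0.0):
    """Return the trajectory of a value pushed by a constant disturbance.

    gain = 0.0 means no feedback at all. gain > 0 feeds the measured error
    back as a corrective action (simple proportional control, assumed here
    purely for illustration).
    """
    value = target
    history = []
    for _ in range(steps):
        error = value - target        # data measured in one part of the system
        correction = -gain * error    # action taken by another part
        value = value + disturbance + correction
        history.append(value)
    return history


# Hypothetical settings: the same disturbance, with and without feedback.
no_feedback = simulate(steps=20, gain=0.0)
with_feedback = simulate(steps=20, gain=0.5)

print("final value without feedback:", no_feedback[-1])
print("final value with feedback:", round(with_feedback[-1], 3))
```

Without feedback the value drifts further from the target at every step. With feedback it settles close to a fixed offset and stays there, which is the stable and predictable behaviour that Cannon's principles describe.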

Take driving for example. Our vehicle and its trusting passengers want to get to their desired destination on time and in one piece. To achieve this we will need to keep our vehicle within the boundaries of the road – the white lines – in order to avoid “disappointment”.

As their trusted driver our feedback loop consists of a view of the road ahead via the front windscreen; our vision connected through a working nervous system to the muscles in our arms and legs; to the steering wheel, accelerator and brakes; then to the engine, transmission, wheels and tyres and finally to the road underneath the wheels. It is quite a complicated multi-step feedback system – but an effective one. The road can change direction and unpredictable things can happen and we can adapt, adjust and remain in control. An inferior feedback design would be to use only the rear-view mirror and to steer by looking at the white lines emerging from behind us. This design is just as complicated but it is much less effective and much less safe because it is entirely reactive. We get no early warning of what we are approaching. So, any system that uses the output performance as the feedback loop to the input decision step is like driving with just a rear-view mirror. Complex, expensive, unstable, ineffective and unsafe.

As the number of steps in a process increases, the design of the feedback stabilisation becomes more important – and so does the number of ways we can get it wrong: the wrong feedback signal, or from the wrong place, or to the wrong place, or at the wrong time, or with the wrong interpretation – any of which results in the wrong decision, the wrong action and the wrong outcome. Getting it right means getting all of it right all of the time – not just some of it right some of the time. We can’t leave it to chance – we have to design it to work.

Let us consider a real example: the NHS 18-week performance requirement.

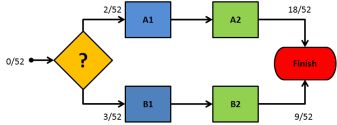

The stream map shows a simple system with two parallel streams: A and B that each has two steps 1 and 2. A typical example would be generic referral of patients for investigations and treatment to one of a number of consultants who offer that service. The two streams do the same thing so the first step of the system is to decide which way to direct new tasks – to Step A1 or to Step B1. The whole system is required to deliver completed tasks in less than 18 weeks (18/52) – irrespective of which stream we direct work into. What feedback data do we use to decide where to direct the next referral?

The do-nothing option is to just allocate work without using any feedback. We might do that randomly, alternately or by some other means that is independent of the system. This is called a push design and is equivalent to driving with your eyes shut and relying on hope and luck for a favourable outcome. We will know when we have got it wrong – but it is too late then – we have crashed the system!

A more plausible option is to use the waiting time for the first step as the feedback signal – streaming work to the first step with the shortest waiting time. This makes sense because the time waiting for the first step is part of the lead time for the whole stream so minimising this first wait feels reasonable – and it is – BUT only in one situation: when the first steps are the constraint steps in both streams [the constraint step is the one that defines the maximum stream flow]. If this condition is not met then we are heading for trouble and the map above illustrates why. In this case Stream A is just failing the 18-week performance target but because the waiting time for Step A1 is the shorter we would continue to load more work onto the failing stream – and literally push it over the edge. In contrast Stream B is not failing and because the waiting time for Step B1 is the longer it is not being overloaded – it may even be underloaded. So this “plausible” feedback design can actually make the system less stable. Oops!
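
The trap can be sketched in a few lines of Python. The waiting times below are hypothetical and are not read from the stream map; they are chosen only so that Stream A just misses the 18-week target while having the shorter first wait. The `Stream` class and the `route_by_first_wait` function are likewise names made up for this illustration.

```python
from dataclasses import dataclass

TARGET_WEEKS = 18


@dataclass
class Stream:
    name: str
    wait_step1: float  # weeks waiting for Step 1 (hypothetical value)
    wait_step2: float  # weeks waiting for Step 2 (hypothetical value)

    @property
    def lead_time(self) -> float:
        """Total waiting time across both steps, in weeks."""
        return self.wait_step1 + self.wait_step2


def route_by_first_wait(streams):
    """The 'plausible' rule: send new work to the shortest first-step wait."""
    return min(streams, key=lambda s: s.wait_step1)


# Assumed numbers: A has the shorter first wait but the longer total.
streams = [
    Stream("A", wait_step1=5, wait_step2=14),
    Stream("B", wait_step1=9, wait_step2=4),
]

chosen = route_by_first_wait(streams)
print("next referral goes to stream", chosen.name)
print("that stream's lead time in weeks:", chosen.lead_time)
print("already over target:", chosen.lead_time > TARGET_WEEKS)
```

With these assumed numbers the rule keeps choosing the stream that is already over the target, because the signal it uses says nothing about the queue in front of the constraint step.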

In our transport metaphor – this is like driving too fast at night or in fog – only being able to see what is immediately ahead – and then braking and swerving to get around corners when they “suddenly” appear and running off the road unintentionally! Dangerous and expensive.

With this new insight we might now reasonably suggest using the actual output performance to decide which way to direct new work – but this is back to driving by watching the rear-view mirror! So what is the answer?

The solution is to design the system to use the most appropriate feedback signal to guide the streaming decision. That feedback signal needs to be forward looking, responsive and to lead to stable and equitable performance of the whole system – and it may originate from inside the system. The diagram above holds the hint: the predicted waiting time for the second step would be a better choice. Please note that I said the predicted waiting time – which is estimated when the task leaves Step 1 and joins the back of the queue between Step 1 and Step 2. It is not the actual time the most recent task came off the queue: that is rear-view mirror gazing again.
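
One simple way to estimate a predicted waiting time, offered here as an assumption rather than as the method behind the diagram, is to divide the number of tasks already queued in front of Step 2 by the rate at which Step 2 completes them. The Python sketch below routes new work using that estimate. The queue sizes, the completion rates and the names `Stream` and `route_by_predicted_wait` are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Stream:
    name: str
    queue_before_step2: int      # tasks waiting between Step 1 and Step 2
    step2_rate_per_week: float   # tasks Step 2 completes per week

    def predicted_step2_wait(self) -> float:
        """Estimated weeks a task joining the queue now will wait for Step 2.

        Assumes a first-come-first-served queue and a steady completion rate.
        """
        return self.queue_before_step2 / self.step2_rate_per_week


def route_by_predicted_wait(streams):
    """Send new work to the stream with the shortest predicted Step 2 wait."""
    return min(streams, key=lambda s: s.predicted_step2_wait())


# Assumed numbers for illustration only.
streams = [
    Stream("A", queue_before_step2=28, step2_rate_per_week=2.0),
    Stream("B", queue_before_step2=12, step2_rate_per_week=3.0),
]

chosen = route_by_predicted_wait(streams)
for s in streams:
    print(s.name, "predicted Step 2 wait in weeks:", s.predicted_step2_wait())
print("next referral goes to stream", chosen.name)
```

Because the estimate is made from the current queue rather than from tasks that have already finished, it looks forward. A real service would need a better predictor than this one, for example one that allows for variation in the completion rate.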

When driving we look as far ahead as we can, for what we are heading towards, and we combine that feedback with our present speed to predict how much time we have before we need to slow down, when to turn, in which direction, by how much, and for how long. With effective feedback we can behave proactively, avoid surprises, and eliminate sudden braking and swerving! Our passengers will have a more comfortable ride and are more likely to survive the journey! And the better we can do all that the faster we can travel in both comfort and safety – even on an unfamiliar road. It may be less exciting but excitement is not our objective. On-time delivery is our goal.

Excitement comes from anticipating improvement – maintaining what we have already improved is rewarding. We need both to sustain us and to free us to focus on the improvement work!