One of the really, really cool things about the 1.3 kg of “ChimpWare” between our ears is the way it learns.

We have evolved the ability to predict the likely near-future based on just a small number of past experiences.

And we do that by creating stored mental models.

Not even the most powerful computers can do it as well as we do – and we do it without thinking. Literally. It is an unconscious process.

This ability to pro-gnose (=before-know) gave our ancestors a major survival advantage when they were wandering about on the savanna millions of years ago. And we have used this amazing ability to build societies, mega-cities and spaceships.

But this capability is not perfect. It has a flaw. Our “ChimpOS” does not store a picture of reality like a digital camera; it stores a patchy and distorted perception of reality, and then fills in the gaps with guesses (i.e. gaffes). And we do not notice – consciously.

The cognitive trap is set and sits waiting to be sprung. And to trip us up.

Here is an example:

“Improvement implies change”

Yes. That is a valid statement because we can show that whenever improvement has been the effect, then some time before that a change happened. And we can show that when there are no changes, the system continues to behave as it always has. Status quo.

The cognitive trap is that our ChimpOS is very good at remembering temporal associations (for example, an association between “improvement” and “change”) but it does not reliably store which one led to the other. So, if two concepts are presented at the same time, and we spice-the-pie with a bit of strong emotion, then we are more likely to associate them. Which is OK.

The problem comes when we play back the memory … it can come back as …

“change implies improvement” which is not valid. And we do not notice.

To prove it is not valid we just need to find one example where a change led to a deterioration; an unintended negative consequence, a surprising, confusing and disappointing failure to achieve our intended improvement.

An embarrassing gap between our intent and our impact.

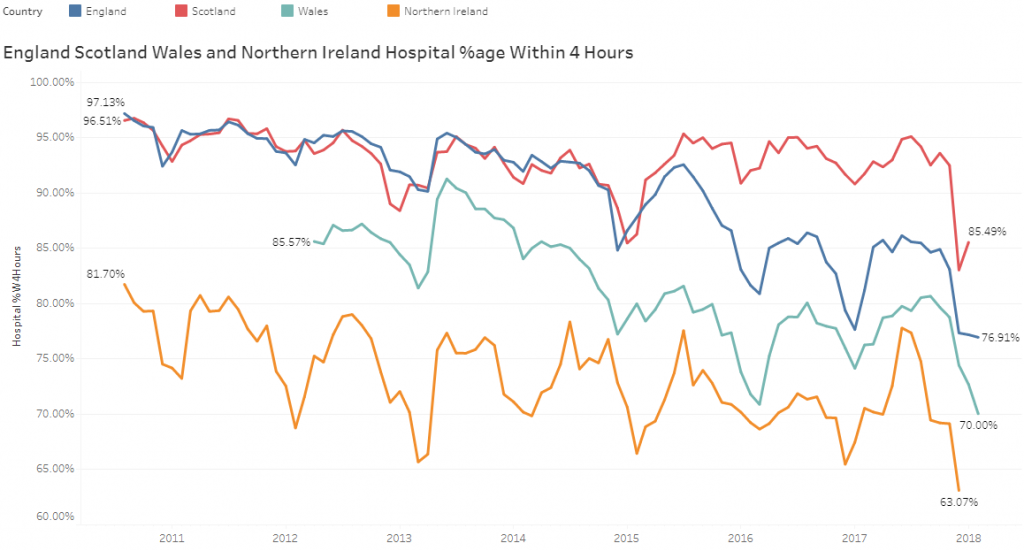

And finding that evidence is not hard. Failures and disappointments in the world of improvement are all too common.
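The difference between a statement and its converse can be checked mechanically. Here is a minimal sketch in Python, assuming a small, made-up list of observations (the records and the helper names `implies` and `holds` are illustrative only, not real data or an established method). It shows that a single change-without-improvement record is enough to break “change implies improvement”, while “improvement implies change” still holds.

```python
# Hypothetical observations: (change_made, improvement_seen).
# These records are invented purely for illustration.
observations = [
    (True, True),    # a change that delivered an improvement
    (True, False),   # a change that led to a deterioration
    (False, False),  # no change, status quo
]


def implies(p, q):
    """Logical implication: 'p implies q' is false only when p is true and q is false."""
    return (not p) or q


def holds(records, antecedent, consequent):
    """True if 'antecedent implies consequent' is satisfied by every record."""
    return all(implies(antecedent(r), consequent(r)) for r in records)


def changed(record):
    return record[0]


def improved(record):
    return record[1]


improvement_implies_change = holds(observations, improved, changed)
change_implies_improvement = holds(observations, changed, improved)

# The records that disprove the converse: a change with no improvement.
counterexamples = [r for r in observations if changed(r) and not improved(r)]

print("improvement implies change:", improvement_implies_change)
print("change implies improvement:", change_implies_improvement)
print("counterexamples:", counterexamples)
```

With these assumed records the first statement survives and the second does not, because of the one `(True, False)` entry.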

And then we can fall into the same cognitive trap because we generalise from a single, bad experience and the lesson our ChimpOS stores for future reference is “change is bad”.

And forever afterwards we feel anxious whenever the idea of change is suggested.

It is a very effective survival tactic – for a hominid living on the African savanna millions of years ago, and at risk of falling prey to sharp-fanged, hungry predators. It is a less useful tactic in the modern world where the risk of being eaten-for-lunch is minimal, and where the pace of change is accelerating. We must learn to innovate and improve to survive in the social jungle … and we are not well equipped!

Here is another common cognitive trap:

Excellence implies no failures.

Yes. If we are delivering a consistently excellent service then the absence of failures will be a noticeable feature.

No failures implies excellence.

This is not a valid inference. If quality-of-service is measured on a continuum from Excrement-to-Excellent, then we can be delivering a consistently mediocre service, one that is barely adequate, and also have no failures.
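A small sketch makes the counterexample concrete. It assumes a hypothetical 0-to-10 quality score, with invented thresholds for what counts as a failure and what counts as excellent; none of these numbers come from a real service.

```python
# Hypothetical quality scale from 0 (Excrement) to 10 (Excellent).
# Both thresholds are assumptions chosen only to illustrate the argument.
FAILURE_BELOW = 3    # a score under this counts as a failure
EXCELLENT_FROM = 8   # a score at or above this counts as excellent


def has_failures(scores):
    """True if any delivery scored below the failure threshold."""
    return any(s < FAILURE_BELOW for s in scores)


def is_excellent(scores):
    """True if every delivery scored at or above the excellence threshold."""
    return all(s >= EXCELLENT_FROM for s in scores)


excellent_service = [9, 9, 10, 8]   # consistently excellent
mediocre_service = [4, 3, 4, 3]     # consistently barely adequate

for name, scores in [("excellent", excellent_service), ("mediocre", mediocre_service)]:
    print(name, "| failures:", has_failures(scores), "| excellent:", is_excellent(scores))
```

Both services report no failures, but only one of them is excellent, so “no failures” on its own tells us nothing about excellence.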

The design flaw here is that our ChimpWare/ChimpOS memory system is lossy.

We do not remember all the information required to reconstruct an accurate memory of reality – because there is too much information. So we distort, we delete and we generalise. And we do that because when we evolved it was a good enough solution, and it enabled us to survive as a species, so the ChimpWare/ChimpOS genes were passed on.

We cannot reverse millions of years of evolution. We cannot get a wetware or a software upgrade. We need to learn to manage with the limitations of what we have between our ears.

And to avoid the cognitive traps we need to practise the discipline of bringing our unconscious assumptions up to conscious awareness … and we do that by asking carefully framed questions.

Here is another example:

A high-efficiency design implies high-utilisation of resources.

Yes, that is valid. Idle resources mean wasted resources, which means lower efficiency.

Q1: Is the converse also valid?

Q2: Is there any evidence that disproves the converse?

If high-utilisation does not imply high-efficiency, what are the implications of falling into this cognitive trap? What is the value of measuring utilisation? Does it have a value?

These are useful questions.