This is the now-infamous statement that Donald Rumsfeld made at a Pentagon press conference, which triggered some good-natured jesting from the assembled journalists.

But there is a problem with it.

There is a fourth combination that he does not mention: the unknown-knowns.

That is a shame, because they are arguably the most important: they cause the most problems. Avoidable problems.

Suppose there is a piece of knowledge that one person knows but another does not; to the second person, it is an unknown-known.

None of us knows everything, and we do not need to, because knowledge that is of no value to us is irrelevant to us.

But what happens when the unknown-known is of value to us? More than that, what happens when it would be reasonable for someone else to expect us to know it, because it is our job to know?

A surgeon would not be expected to know a lot about astronomy, but they would be expected to know a lot about anatomy.

So, what happens if we become aware that we are missing an important piece of knowledge that is actually already known? What is our normal human reaction to that discovery?

Typically, our first reaction is fear-driven and we behave defensively. This is because we fear the potential loss of face from being exposed as inept.

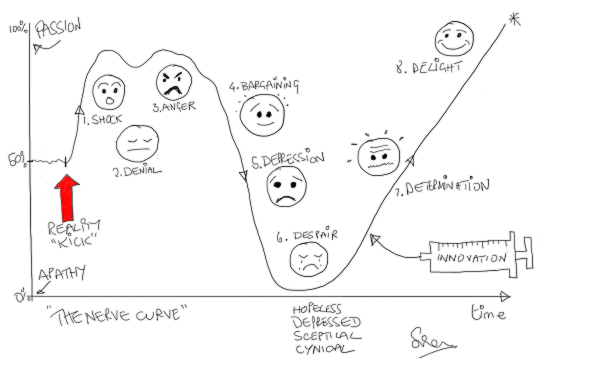

From this sudden shock we then enter a characteristic emotional pattern called the Nerve Curve.

After the shock of discovery we quickly flip into denial and, if that does not work, then into anger (i.e. blame). We ignore the message and, if that does not work, we shoot the messenger.

And when we are in this emotionally charged state, our rationality tends to take a back seat. So, if we want to benefit from the discovery of an unknown-known, we have to learn to bite our lip, wait, let the red mist dissipate, and then re-examine the available evidence with a cool, curious, open mind: a state of mind that is receptive to learning.

Recently, I was reminded of this.

The context is healthcare improvement, and I was using a systems engineering framework to conduct some diagnostic data analysis.

My first task was to run a data-completeness-verification-test … and the data I had been sent did not pass the test. Some of it was missing. It was an error of omission (EOO), and errors of omission are the hardest ones to spot. Hence the need for the verification test.

The cause of the EOO was an unknown-known in the department that holds the keys to the data warehouse. I had come across this EOO before, so I was not surprised.

I was not annoyed either. I just fed back the results of the test, explained what the issue was, explained the cause, and they listened and learned.

The implications of this specific EOO are quite profound, though, because it appears to be ubiquitous across the NHS.

To be specific, it relates to the precise details of how raw data on demand, activity, length of stay and bed occupancy are extracted from the NHS data warehouses.

So it is rather relevant to just about everything the NHS does!

And the error of omission leads to confusion at best and, at worst, to the following sequence: incomplete data => invalid analysis => incorrect conclusion => poor decision => counter-productive action => unintended outcome.

Does that sound at all familiar?

So, if you would like to learn about this valuable unknown-known, then I recommend the narrative by Dr Kate Silvester, an internationally recognised expert in healthcare improvement. In it, Kate retells the story of her emotional roller-coaster ride when she discovered she was making the same error.

Here is the link to the abstract, from which you can download and read the full text of Kate’s excellent essay and help to make it a known-known.

That is what system-wide improvement requires – sharing the knowledge.