There is a widely held belief that competition is the only way to achieve improvement.

This is a limiting belief.

But our experience tells us that competition is an essential part of improvement!

So which is correct?

When two athletes compete they both have to train hard to improve their individual performance. The winner of the race is the one who improves the most. So by competing with each other they are forced to improve.

The goal of improvement is excellence and the test-of-excellence is performed in the present and is done by competing with others. The most excellent is labelled the “best” or “winner”. Everyone else is branded “second best” or “loser”.

This is where we start to see the limiting belief of competition.

It has a crippling effect. Many competitive people will not even attempt the race if they do not feel they can win. Their limiting belief makes them too fearful. They fear loss of self-esteem. Their ego is too fragile. They value hubris more than humility. And by not taking part they abdicate any opportunity to improve. They remain arrogantly mediocre and blissfully ignorant of it. They are the real losers.

So how can we keep the positive effect of competition and at the same time escape the limiting belief?

There are two ways:

First we drop the assumption that the only valid test of excellence is a comparison of us with others in the present. And instead we adopt the assumption that it is equally valid to compare us with ourselves in the past.

We can all improve compared with what we used to be. We can all be winners of that race.

And as improvement happens our perspective shifts. What becomes normal in the present would have been assumed to be impossible in the past.

This week I sat at my desk in a state of wonder.

I held in my hand a small plastic widget about the size of the end of my thumb. It was a new USB data stick that had just arrived, courtesy of Amazon, and on one side in small white letters it proudly announced that it could hold 64 Gigabytes of data (that is 64 x 1024 x 1024 x 1024). And it cost less than a take-away curry.

About 30 years ago, when I first started to learn how to design, build and program computer systems, a memory chip that was about the same size and same cost could hold 4 kilobytes (4 x 1024).

So in just 30 years we have seen a 16-million-fold increase in data storage capacity. That is astounding! Our collective knowledge of how to design and build memory chips has improved so much. And yet we take it for granted.
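For anyone who wants to check the arithmetic, here is a minimal sketch of the calculation. It assumes the binary convention used above (1 kilobyte = 1024 bytes), and the variable names are purely illustrative.

```python
# Binary convention from the text: 1 kilobyte = 1024 bytes (an assumption
# carried over from the "x 1024" figures quoted above).
KILOBYTE = 1024

old_chip_bytes = 4 * KILOBYTE          # the 4 kilobyte memory chip
usb_stick_bytes = 64 * KILOBYTE ** 3   # the 64 Gigabyte data stick

# How many of the old chips would it take to match one new stick?
fold_increase = usb_stick_bytes // old_chip_bytes

print(f"{fold_increase:,}-fold increase")
```

The result is a little under 17 million, which is where the "16-million-fold" figure comes from (16 x 1024 x 1024).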

The second way to side-step the limiting belief is even more powerful.

It is to drop the belief that individual improvement is enough.

Collective improvement is much, much, much more effective.

The human body is made up of about 50 trillion (50 x 1000 x 1000 x 1000 x 1000) cells – about the same as the number of bytes I could store on 1000 of my wonderful new 64 Gigabyte data sticks!
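As a rough sanity check on that comparison, the two numbers can be put side by side. This is only a back-of-envelope sketch: the 50 trillion cell count is the estimate quoted above, and the names are illustrative.

```python
# Estimate quoted in the text: about 50 trillion cells in a human body.
cells_in_body = 50 * 1000 ** 4

# One 64 Gigabyte stick, using the same 1024-based convention as before.
stick_bytes = 64 * 1024 ** 3
bytes_on_1000_sticks = 1000 * stick_bytes

# Ratio of stored bytes to cells; a value near 1 means "about the same".
ratio = bytes_on_1000_sticks / cells_in_body

print(f"{bytes_on_1000_sticks:,} bytes vs {cells_in_body:,} cells")
print(f"ratio = {ratio:.2f}")
```

The two figures are within a factor of about 1.4 of each other, so "about the same" holds as an order-of-magnitude statement.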

And each cell is a microscopic living individual. A nano-engineered adaptive system of wondrous complexity and elegance.

Each cell breathes, eats, grows, moves, reproduces, senses, learns and remembers. These cells are really smart too! And they talk to each other, and they learn from each other.

And what makes the human possible is that its community of 50 trillion smart cells is a collaborative community … not a competitive community.

If all our cells started to compete with each other we would be very quickly reduced to soup (which is what the Earth was bathed in for about 2.7 billion years).

The first multi-celled organisms gained a massive survival advantage when they learned how to collaborate.

The rest is the Story of Evolution. Even Charles Darwin missed the point – evolution is more about collaboration than competition – and we are only now beginning to learn that lesson. The hard way.

So survival is about learning and improving.

And survival of the fittest does not mean the fittest individual … it means the fittest group.

Collaborative improvement is the process through which we can all achieve win-win-win excellence.

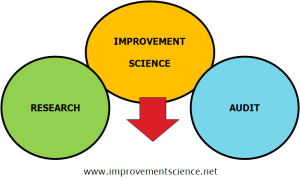

And the understanding of how to do this collaborative improvement has a name … it is called Improvement Science.