It is often assumed that if you combine world-class individuals into a team you will get a world-class team.

Meredith Belbin showed 30 years ago that you do not, and it was a big shock at the time!

So, if world-class individuals are not enough, what are the necessary and sufficient conditions for a world-class team?

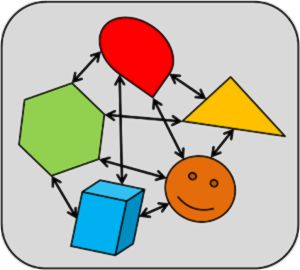

The late Russell Ackoff described it perfectly – he said that if you take the best parts of all the available cars and put them together you do not get the best car – you do not even get a car. The parts are necessary but they are not sufficient – how the parts connect to each other and how they influence each other is more important. These interdependencies are part of the system – and understanding a system requires understanding both the parts and their relationships.

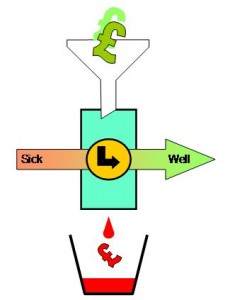

A car is a mechanical system; the human body is a biological system; and a team is a social system. So creating a high-performance, healthy, world-class team requires that both the individuals and their relationships with each other are aligned and resonant.

When the parts are aligned we get more than the sum of the parts; and when they are not we get less.

If we define intelligence as "an ability to understand and solve novel problems", then the capability of a team to solve novel problems is its collective intelligence. Experience suggests that a group can appear to be less intelligent than any of its individual members. The problem here lies in the relationships between the parts – and the term often applied is "dysfunctional".

The root cause is almost always distrustful attitudes, which stem from disrespectful prejudices and which lead to discounting behaviour. We learn these prejudices, attitudes and behaviours from each other and we reinforce them with years of practice. But if they are learned, then they can be unlearned. It is simple in theory, and it is possible in practice, but it is not easy.

So if we want to (dis)solve complex, novel problems, then we need world-class problem-solving teams; and to transform our third-class dysfunctional teams we must first learn to respectfully challenge our disrespectful behaviour.

The elephant is in the room!