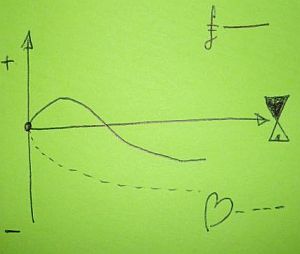

There seem to be two extreme ways to build momentum for improvement: One Big Whack or Many Small Nudges.

The One Big Whack can come at the start. It is a shock tactic designed to generate an emotional flip – a Road to Damascus moment – one that people remember very clearly. This is the stuff that newspapers fall over themselves to find – the Big Front Page Story – because it is emotive and so it sells newspapers. The One Big Whack can also come later, as an act of desperation by those in power who originally broadcast The Big Idea and who are disappointed and frustrated by the lack of measurable improvement as time ticks by and the money is consumed.

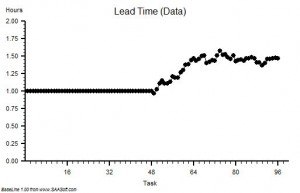

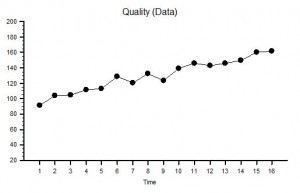

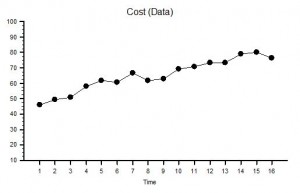

Many Small Nudges do not generate a big emotional impact; they are unthreatening; they go almost unnoticed; they do not sell newspapers, and they accumulate over time. The surprise comes when those in power are delighted to discover that significant improvement has been achieved at almost no cost and with no cajoling.

So how is the Many Small Nudges method implemented?

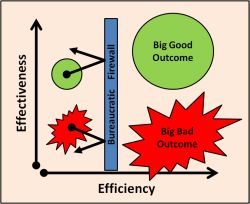

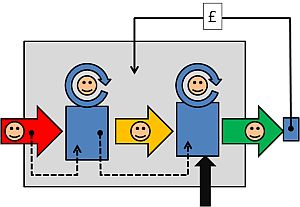

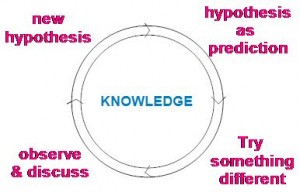

The essential element is The Purpose – and this must not be confused with A Process. The Purpose is what is intended; A Process is how it is achieved. And answering the “What is my/our purpose?” question is surprisingly difficult to do.

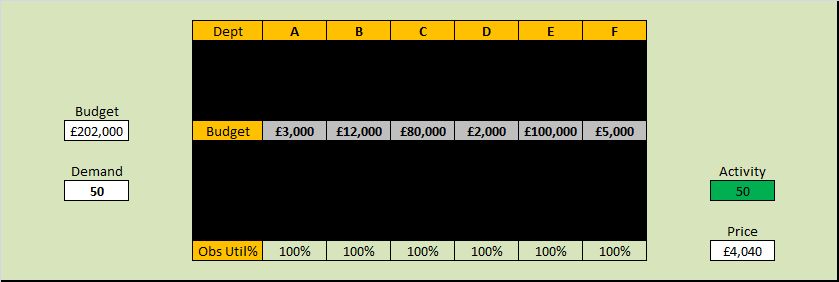

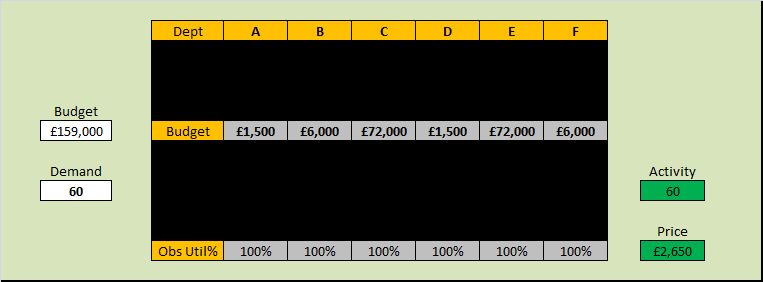

For example, I often ask doctors “What is our purpose?” The first reaction is usually “What a dumb question – it is obvious”. “OK – so if it is obvious, can you describe it?” The reply is usually “Well, err, um, I suppose, um – ah yes – our purpose is to heal the sick!” “OK – so if that is our purpose, how well are we doing?” Embarrassed silence. We do not know, because we do not all measure our outcomes as a matter of course. We measure activity and utilisation, which are measures of our process, not of our purpose, and we justify not measuring outcomes by being too busy measuring activity and utilisation.

Sometimes I ask the purpose question a different way. There is a Latin phrase that is often used in medicine, primum non nocere, which means “First do no harm”. So I ask: “Is that our purpose?” The reply is usually something like “No, but safety is more important than efficiency!” “OK – safety and efficiency are both important, but are they our purpose?” It is not an easy question to answer.

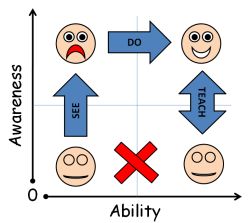

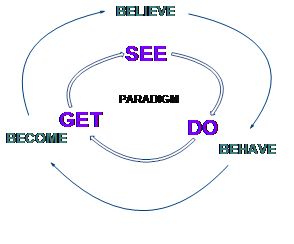

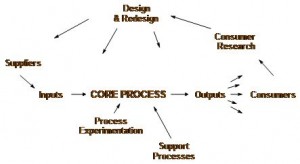

A Process can be designed, because it has to obey the Laws of Physics. The Purpose relates to People, not to Physics, so we cannot design The Purpose; we can only design a process to achieve The Purpose. We can, though, define The Purpose, and in so doing we achieve clarity of purpose. For a healthcare organisation a possible Clear Statement of Purpose might be “WE want a system that protects, improves and restores health”.

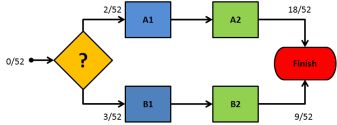

Purpose statements state what we want to have. They do not state what we want to do, to not do, or to not have. This may seem like splitting hairs, but it is important because the Statement of Purpose is key to the Many Small Nudges approach.

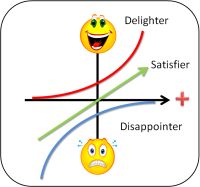

Whenever we have a decision to make we can ask “How will this decision contribute to The Purpose?” If an option would move us in the direction of The Purpose, then it gets a higher ranking than a choice that would steer us away from The Purpose. There is only one On Purpose direction and many Off Purpose ones, and this insight explains why avoiding what we do not want (i.e. harm) is not the same as achieving what we do want. We can avoid doing harm and yet not achieve health, and be very busy, all at the same time.

Leaders often assume that it is their job to define The Purpose for their Organisation – to create the Vision Statement, or the Mission Statement. Experience suggests that clarifying the existing but unspoken purpose is all that is needed – just by asking one little question – “What is our purpose?” – and asking it often and of everyone – and not being satisfied with a “process” answer.