It is a common and intuitively reasonable assumption that if something is explainable then it is predictable; and if it is not explainable then it is not predictable. Unfortunately this beguiling assumption is incorrect. Some things are explainable but not predictable; and some others are predictable but not explainable. Believe me? Of course not. We are all skeptics when our intuitively obvious assumptions and conclusions are challenged! We want real and rational evidence, not rhetorical exhortation.

OK. Explainable means that the principles that guide the process are conceptually simple. We can explain the parts in detail and we can explain how they are connected together in detail. Predictable implies that if we know the starting point in detail, and the intervention in detail, then we can predict what the outcome will be – in detail.

Let us consider an example. Say we know how much we have in our bank account, and we know how much we intend to spend on that new whizzo computer; then we can predict what will be left in our bank account when the payment has been processed. Yes. This is an explainable and predictable system. It is called a linear system.
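The bank account example can be sketched in a few lines of Python. The function name and the amounts are illustrative assumptions, not figures from the text. The point of the sketch is the linearity: a small error in what we know at the start produces an equally small error in the prediction, and no more.

```python
def balance_after_payment(balance_pence: int, payment_pence: int) -> int:
    """Predict the balance left once the payment has been processed."""
    return balance_pence - payment_pence


# Illustrative amounts (assumed), held in pence to avoid rounding issues.
start = 150_000      # 1500.00 in the account
computer = 89_900    # 899.00 for the new computer

predicted = balance_after_payment(start, computer)
print(predicted)  # 60100

# Linearity: a 100 pence error in the starting balance gives
# exactly a 100 pence error in the predicted outcome.
nudged = balance_after_payment(start + 100, computer)
print(nudged - predicted)  # 100
```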

Let us consider another example. Say we know we have six dice each with numbers 1 to 6 printed on them and we throw them at the same time. Can we predict where they will land and what the final sum will be? No. We can say that it will be between 6 and 36 but that is all. And after we have thrown the dice we will not be able to explain, in detail, how they came to rest exactly where they did. This is an unpredictable and unexplainable system. It is called a random system.
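A rough sketch of the dice example follows. It assumes that Python's pseudo-random generator is an acceptable stand-in for physical dice, which it only approximates; the helper name `throw_dice` is hypothetical. The only firm prediction we can check is the range of the total.

```python
import random


def throw_dice(rng: random.Random, count: int = 6) -> list[int]:
    """Throw `count` six-sided dice and return the face values."""
    return [rng.randint(1, 6) for _ in range(count)]


rng = random.Random()  # unseeded, so each run gives different throws
totals = [sum(throw_dice(rng)) for _ in range(1000)]

# The individual totals differ from run to run, but every one of
# them lies between 6 and 36.
print(min(totals) >= 6 and max(totals) <= 36)  # True
```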

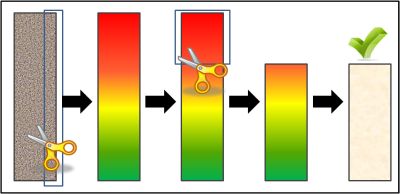

This is a picture of a conceptually simple system. It is a novelty toy and it comprises two thin sheets of glass held a few millimetres apart by some curved plastic spacers. The narrow space is filled with green coloured oil, some coarse black volcanic sand, and some fine white coral sand. That is all. It is a conceptually simple toy. I have (by some magical means) layered the sand so that the coarse black sand is at the bottom and the fine white sand is on top. It is a stable arrangement – and explainable. I then tipped the toy on its side – I rotated it through 90 degrees. It is a simple intervention – and explainable.

My intervention has converted a stable system to an unstable one and I confidently predict that the sand and oil will flow under the influence of gravity. There is no randomness here – I do not jiggle the toy – so the outcome should be predictable because I can explain all the parts in detail before we start; and I can explain the process in detail; and I can explain precisely what my intervention will be. So I should be able to predict the final configuration of the sand when this simple and explainable system finally settles into a new stable state again. Yes?

Well, I cannot. I can make some educated guesses – some plausible projections. But the only way to find out precisely what will happen is by doing the experiment and observing what actually happens.

This is what happened.

The final, stable configuration of the coarse black and fine white sand has a strange beauty in the way the layers are re-arranged. The result is not random – it has structure. And with the benefit of hindsight I feel I can work backwards and understand how it might have come about. It is explainable in retrospect but I could not predict it in prospect – even with a detailed knowledge of the starting point and the process.

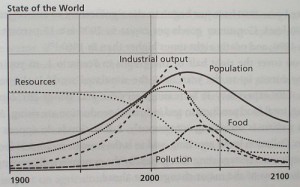

This is called a non-linear system. Explainable in concept but difficult to predict in practice. The weather is another example of a non-linear system – explainable in terms of the physics but not precisely predictable. How reliable are our long range weather forecasts – or the short range ones for that matter?

Non-linear systems exhibit complex and unpredictable behaviour – even though they may be simple in concept and uncomplicated in construction. Randomness is usually present in real systems but it is not the cause of the complex behaviour, and making our systems more complicated seems likely to result in more unpredictable behaviour – not less.
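The sand toy itself is far too intricate to model in a few lines, so the sketch below swaps in a different system: the logistic map, a standard textbook example of a non-linear rule. It is an assumption chosen for illustration, not something taken from the toy. The rule is a single line and contains no randomness, yet two starting points that differ by one part in a billion soon follow quite different paths.

```python
def logistic_trajectory(x0: float, steps: int, r: float = 4.0) -> list[float]:
    """Iterate x -> r * x * (1 - x) and return every value visited."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2, 60)
b = logistic_trajectory(0.2 + 1e-9, 60)  # almost the same starting point
gaps = [abs(p - q) for p, q in zip(a, b)]

print(gaps[5])    # still tiny after a few steps
print(max(gaps))  # no longer tiny by the end of the run
```

Running the same starting point twice gives exactly the same trajectory, so the rule is fully explainable and repeatable. The difficulty is that we never know a real starting point to nine decimal places.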

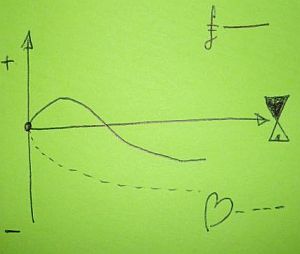

If we want the behaviour of our system to be predictable and our system has non-linear parts and relationships in it – then we are forced to accept two Universal Truths.

1. That our system behaviour will only be predictable within limits (even if there is little or no randomness in it).

2. That to keep the behaviour within acceptable limits we need to be careful how we arrange the parts and how they relate to each other.

This challenge of creating a predictable-within-acceptable-limits system from non-linear parts is called resilient design.

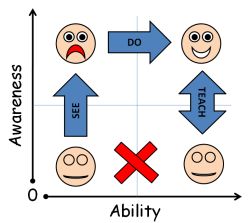

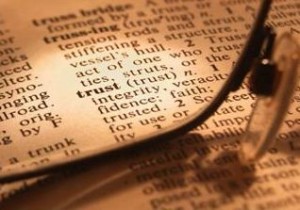

We have a fourth option to consider: a system that has a predictable outcome but an unexplainable reason.

We make predictions in two ways – by working out what will happen or by remembering what has happened before. The second method is much easier so it is the one we use most of the time: it is called re-cognition. We call it knowledge.

If we have a black box with inputs on one side and outputs on the other, and we observe that when we set the inputs to a specific configuration we always get the same output – then we have a predictable system. We cannot explain how the inputs result in the output because the inner workings are hidden. It could be very simple – or it could be fiendishly complicated – we do not know.
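Prediction by remembering can be sketched as a simple lookup. Everything named here is hypothetical: `RecalledPredictor` is an illustrative class, and `black_box` is an arbitrary stand-in whose workings the predictor never inspects. The sketch can repeat what it has seen, and it has nothing to say about an input configuration it has never tried.

```python
class RecalledPredictor:
    """Predict outputs purely by remembering what was observed before."""

    def __init__(self) -> None:
        self._seen: dict[tuple, object] = {}

    def observe(self, inputs: tuple, output: object) -> None:
        self._seen[inputs] = output

    def predict(self, inputs: tuple) -> object:
        if inputs not in self._seen:
            raise LookupError("never tried this input configuration")
        return self._seen[inputs]


def black_box(a: int, b: int) -> int:
    # Hypothetical hidden workings; the predictor never looks in here.
    return (a * 31 + b * 17) % 7


predictor = RecalledPredictor()
for config in [(1, 2), (3, 4)]:
    predictor.observe(config, black_box(*config))

print(predictor.predict((1, 2)) == black_box(1, 2))  # True
```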

In this situation we have no choice but to accept the status quo – and we have to accept that to get a predictable outcome we have to follow the rules and just do what we have always done before. It is the creed of blind acceptance: "If you always do what you have always done, you will always get what you always got." It is knowledge but it is not understanding. New knowledge can only be found by trial and error. It is not wisdom, it is not design, it is not curiosity and it is not Improvement Science.

If our systems are non-linear (which they are) and we want predictable and acceptable performance (which we do) then we must strive to understand them and then to design them to be as simple as possible (which is difficult) so that we have the greatest opportunity to improve their performance by design (which is called Improvement Science).

This is a snapshot of the evolving oil-and-sand system. Look at that weird wine-glass shaped hole in the top section caused by the black sand being pulled down through the gap in the spacer then running down the slope of the middle section to fill a white sand funnel and then slip through the next hole onto the top of the white sand pyramid created by the white sand in the middle section that slipped through earlier onto the top of the sliding sand in the lowest section. Did you predict that? I suspect not. Me neither. But I can explain it – with the benefit of hindsight.

So what is it that is causing this complex behaviour? It is the spacers – the physical constraints to the flow of the sand and oil. And the same is true of systems – when the process hits a constraint then the behaviour suddenly changes and complex behaviour emerges. And there is more to it than even this. It is the gaps between the spacers that are creating the complex behaviour. The flow from one compartment leaks into the next and influences its behaviour, and then leaks into the one after that. This is what happens in all systems – the more constraints that are added to force the behaviour into predictable channels, and the more gaps that exist in the system of constraints, the more complex and unpredictable the system behaviour becomes. Which is exactly the opposite of the intended outcome.

The lesson that this simple toy can teach us is that if we want stable and predictable (i.e. non-complex) behaviour from our complicated systems then we must design them to operate inside the constraints so that they just never quite touch them. That requires data, information, knowledge, understanding and wise design. That is called Improvement Science.

But if, in an act of desperation, we force constraints onto the system we will make the system less stable, less predictable, less safe, less productive, less enjoyable and less affordable. That is called tampering.