“Primum non nocere” is Latin for “First do no harm”.

It is a warning mantra that has been repeated by doctors for thousands of years, and for good reason.

Doctors can be bad for your health.

I am not referring to the rare case where the doctor deliberately causes harm. Such people are criminals and deserve to be in prison.

I am referring to the much more frequent situation where the doctor has no intention to cause harm – but harm is the outcome anyway.

Very often the risk of harm is unavoidable. Healthcare is a high-risk business. Seriously unwell patients can be very unstable and very unpredictable. Heroic efforts to do whatever can be done can result in unintended harm and we have to accept those risks. It is the nature of the work. Much of the judgement in healthcare is balancing benefit with risk on a patient-by-patient basis. It is not an exact science. It requires wisdom, judgement, training and experience. It feels more like an art than a science.

The focus of this essay is not the above. It is on unintentionally causing avoidable harm.

Or rather, on unintentionally not preventing avoidable harm, which is not quite the same thing.

Safety means prevention of avoidable harm. A safe system is one that does that. There is no evidence of harm to collect. A safe system does not cause harm. Never events never happen.

Safe systems are designed to be safe. The root causes of harm are deliberately designed out one way or another. But it is not always easy because to do that we need to understand the cause-and-effect relationships that lead to unintended harm. Very often we do not.

In 1847 a doctor called Ignaz Semmelweis made a very important discovery. He discovered that if the doctors and medical students washed their hands in disinfectant when they entered the labour ward, then the number of mothers and babies who died from infection was reduced.

And the number dropped a lot.

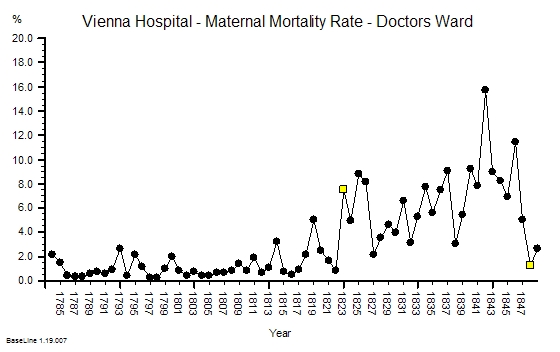

It fell from an annual average of 10% to less than 2%! In really bad months the rate was 30%.

The chart shows the actual data plotted as a time series. The yellow flag in 1848 is just after Semmelweis enforced a standard practice of hand-washing.

Semmelweis did not know the mechanism though. This was not a carefully designed randomised controlled trial (RCT). He was desperate. And he was desperate because this horrendous waste of young lives was only happening on the doctors' ward. On the nurses' ward, which was just across the corridor, the maternal mortality was less than 2%.

The hospital authorities explained it away as ‘bad air’ from outside. That was the prevailing belief at the time. Unavoidable. A risk that had to be just accepted.

Semmelweis could not do a randomized controlled trial because RCTs were not invented until a century later.

And Semmelweis suspected that the difference between the mortality on the nurses' and the doctors' wards was something to do with the mortuary. Only the doctors performed the post-mortems and the practice of teaching anatomy to medical students using post-mortem dissection was an innovation pioneered in Vienna in 1823 (the first yellow flag on the chart above). But Semmelweis did not have this data in 1847. He collated it later and did not publish it until 1861.

What Semmelweis demonstrated was that the unintended and avoidable deaths were caused by ignorance of the mechanism of how microorganisms cause disease. We know that now. He did not.

It would be another 20 years before Louis Pasteur demonstrated the mechanism using the famous experiment with the swan-neck flask. Pasteur did not discover microorganisms; he proved that they did not appear spontaneously in decaying matter as was believed. He proved that when the bugs were killed by boiling, the broth in the flask stayed fresh even though it was exposed to the air. That was a big shock but it was a simple and repeatable experiment. He had a mechanism. He was believed. Germ theory was born. A Scottish surgeon called Joseph Lister read of this discovery and surgical antisepsis was born.

Semmelweis suspected that some ‘agent’ may have been unwittingly transported from the dead bodies to the live mothers and babies on the hands of the doctors. It was a deeply shocking suggestion that the doctors were unwittingly killing their patients.

The other doctors did not take this suggestion well. Not well at all. They went into denial. They discounted the message and they discharged the messenger. Semmelweis never worked in Vienna again. He went back to Hungary and repeated the experiment. It worked.

Even today the message that healthcare practitioners can unwittingly bring avoidable harm to their patients is disturbing. We still seek solace in denial.

Hospital acquired infections (HAI) are a common cause of harm and many are avoidable using simple, cheap and effective measures such as hand-washing.

The harm does not come from what we do. It comes from what we do not do. It happens when we omit to follow the simple safety measures that have been proven to work. Scientifically. With statistical significance. Understood and avoidable errors of omission.

So how is this “statistically significant scientific proof” acquired?

By doing experiments. Just like the one Ignaz Semmelweis conducted. But the improvement he showed was so large that it did not need statistical analysis to validate it. And anyway, such analysis tools were not available in 1847. If they had been, he might have had more success influencing his peers. And if he had achieved that goal then thousands, if not millions, of deaths from hospital acquired infections might have been prevented. With the clarity of hindsight we now know this harm was avoidable.

No. The problem we have now is because the improvement that follows a single intervention is not very large. And when the causal mechanisms are multi-factorial we need more than one intervention to achieve the improvement we want. The big reduction in avoidable harm. How do we do that scientifically and safely?

About 20% of hospital acquired infections occur after surgical operations.

We have learned much since 1847 and we have designed much safer surgical systems and processes. Joseph Lister ushered in the era of safe surgery, and much has happened since.

We routinely use carefully designed, ultra-clean operating theatres, sterilized surgical instruments, gloves and gowns, and aseptic techniques – all to reduce bacterial contamination from outside.

But surgical site infections (SSIs) are still commonplace. Studies show that 5% of patients on average will suffer this complication. Some procedures are much higher risk than others, despite the precautions we take. And many surgeons assume that this risk must just be accepted.

Others have tried to understand the mechanism of SSI and their research shows that the source of the infections is the patients themselves. We all carry a ‘bacterial flora’ and normally that is no problem. Our natural defense – our skin – is enough. But when that biological barrier is deliberately breached during a surgical operation then we have a problem. The bugs get in and cause mischief. They cause surgical site infections.

So we have done more research to test interventions to prevent this harm. Each intervention has been subject to well-designed, carefully conducted, statistically valid and very expensive randomized controlled trials. And the results are often equivocal. So we repeat the trials – bigger, better controlled trials. But the effects of the individual interventions are small and they easily get lost in the noise. So we pool the results of many RCTs in what is called a ‘meta-analysis’ and the answer from that is very often ‘not proven’ – either way. So individual surgeons are left to make the judgement call and not surprisingly there is wide variation in practice. So is this the best that medical science can do?

No. There is another way. What we can do is pool all the learning from all the trials and design a multifaceted intervention. A bundle of care. And the idea of a bundle is that the separate small effects will add or even synergise to create one big effect. We are not so much interested in the mechanism as the outcome. Just like Ignaz Semmelweis.

And we can now do something else. We can test our bundle of care using statistically robust tools that do not require an RCT. They are just as statistically valid as an RCT but use a different design.

And the appropriate tool for this is to measure the time interval between the adverse events – and then to plot this continuous metric as a time-series chart.

But we must be disciplined. First we must establish the baseline average interval and then we introduce our bundle and then we just keep measuring the intervals.

If our bundle works then the interval between the adverse events gets longer – and we can easily prove that using our time-series chart. The longer the interval the more ‘proof’ we have. In fact we can even predict how long we need to observe to prove that ‘no events’ is a statistically significant improvement. That is an elegant and efficient design.
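The baseline-then-intervention discipline described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the method used in the example that follows. It assumes the upper limit is calculated as for an individuals (XmR) chart, that is, the baseline mean plus 2.66 times the average moving range. The dates are invented for illustration and the function names are hypothetical.

```python
from datetime import date
from statistics import mean


def intervals_in_days(event_dates):
    """Days between consecutive adverse events (dates must be in order)."""
    return [(later - earlier).days
            for earlier, later in zip(event_dates, event_dates[1:])]


def xmr_limits(baseline_intervals):
    """Centre line and upper limit, assuming an individuals (XmR) chart."""
    centre = mean(baseline_intervals)
    # Moving range: absolute difference between successive intervals.
    moving_ranges = [abs(b - a)
                     for a, b in zip(baseline_intervals, baseline_intervals[1:])]
    upper = centre + 2.66 * mean(moving_ranges)
    return centre, upper


def is_signal(days_since_last_event, upper):
    """True when an event-free run is longer than the baseline can explain."""
    return days_since_last_event > upper


# Made-up baseline data: dates on which successive events were recorded.
baseline_events = [
    date(2020, 1, 1), date(2020, 1, 9), date(2020, 2, 2),
    date(2020, 2, 7), date(2020, 3, 1), date(2020, 3, 11),
]

baseline = intervals_in_days(baseline_events)
centre, upper = xmr_limits(baseline)
print(f"baseline mean interval: {centre} days, upper limit: {upper:.1f} days")
```

Once the limits are fixed from the baseline, the test after the intervention is simply whether the number of days since the last event has passed the upper limit. That is also how long we need to keep observing before ‘no events’ counts as evidence.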

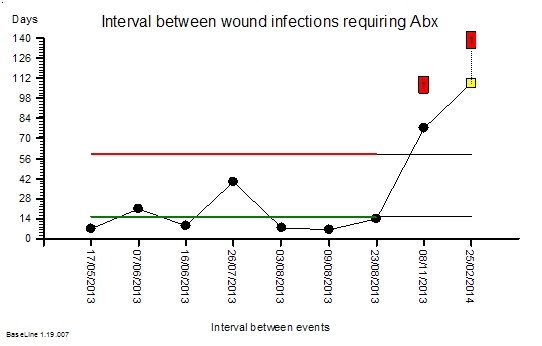

Here is a real and recent example.

The time-series chart shows the interval in days between surgical site infections following routine hernia surgery. These are not life-threatening complications. They rarely require re-admission or re-operation. But they are disruptive for patients. They cause pain, require treatment with antibiotics, and they delay recovery and return to normal activities. So we would like to avoid them if possible.

The green and red lines show the baseline period. The green line says that the average interval between SSIs is 14 days. The red line says that an interval more than about 60 days would be surprisingly long: valid statistical evidence of an improvement. The end of the green and red lines indicates when the intervention was made: when the evidence-based designer care bundle was adopted together with the discipline of applying it to every patient. No judgement. No variation.

The chart tells the story. No complicated statistical analysis is required. It shows a statistically significant improvement. And the SSI rate fell by over 80%. That is a big improvement.
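The link between a longer interval and a lower rate is simple arithmetic: if events arrive on average every m days, the rate is 1/m per day. Here is a hedged illustration in Python, using the 14-day baseline from the chart and a hypothetical post-intervention average of 70 days. The essay does not state the new average, so that figure is an assumption chosen only to show the calculation.

```python
def rate_reduction(baseline_mean_interval, new_mean_interval):
    """Fractional fall in event rate when the mean interval lengthens.

    Rate is the reciprocal of the mean interval, so the reduction is
    1 - (baseline interval / new interval).
    """
    return 1 - baseline_mean_interval / new_mean_interval


# 14 days is the baseline from the chart; 70 days is a hypothetical value.
print(f"{rate_reduction(14, 70):.0%}")
```

So with a 14-day baseline, a fall of over 80% in the rate corresponds to an average interval of more than 70 days between events.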

We still do not know how the care bundle works. We do not know which of the seven simultaneous simple and low-cost interventions we chose are the most important or even if they work independently or in synergy. Knowledge of the mechanism was not our goal.

Our goal was to improve outcomes for our patients – to reduce avoidable harm – and that has been achieved. The evidence is clear.

That is Improvement Science in action.

And to read the full account of this example of the Science of Improvement please go to:

http://www.journalofimprovementscience.org

It is essay number 18.

And avoid another error of omission. If you have read this far please share this message – it is important.