It is often assumed that “high quality costs more” and there is certainly ample evidence to support this assertion: dinner in a high quality restaurant commands a high price. The usual justifications for the assumption are (a) quality ingredients and quality skills cost more to provide; and (b) if people want a high quality product or service that is in relatively short supply then it commands a higher price – the Law of Supply and Demand. Together this creates a self-regulating system – it costs more to produce and so long as enough customers are prepared to pay the higher price the system works. So what is the problem? The problem is that the model is incorrect. The assumption is incorrect. Higher quality does not always cost more – it usually costs less. Convinced? No. Of course not. To be convinced we need hard, rational evidence that disproves our assumption. OK. Here is the evidence.

Suppose we have a simple process that has been designed to deliver the Perfect Service – 100% quality, on time, first time and every time – 100% dependable and 100% predictable. We choose a Service for our example because the product is intangible and we cannot store it in a warehouse – so it must be produced as it is consumed.

To measure the Cost of Quality we first need to work out the minimum price we would need to charge to stay in business – the sum of all our costs divided by the number we produce: our Minimum Viable Price. When we examine our Perfect Service we find that it has three parts – Part 1 is the administrative work: receiving customers; scheduling the work; arranging for the necessary resources to be available; collecting the payment; having meetings; writing reports and so on. The list of expenses seems endless. It is the necessary work of management – but it is not what adds value for the customer. Part 3 is the work that actually adds the value – it is the part the customer wants – the Service that they are prepared to pay for. So what is Part 2 work? This is where our customers wait for their value – the queue. Each of the three parts will consume resources either directly or indirectly – each has a cost – and we want Part 3 to represent most of the cost; Part 2 the least and Part 1 somewhere in between. That feels realistic and reasonable. And in our Perfect Service there is no delay between the arrival of a customer and starting the value work; so there is no queue; so no work in progress waiting to start, so the cost of Part 2 is zero.

The second step is to work out the cost of our Perfect Service – and we could use algebra and equations to do that but we won’t because the language of abstract mathematics excludes too many people from the conversation – let us just pick some realistic numbers to play with and see what we discover. Let us assume Part 1 requires a total of 30 mins of work that uses resources which cost £12 per hour; and let us assume Part 3 requires 30 mins of work that uses resources which cost £60 per hour; and let us assume Part 2 uses resources that cost £6 per hour (if we were to need them). We can now work out the Minimum Viable Price for our Perfect Service:

Part 1 work: 30 mins @ £12 per hour = £6

Part 2 work: 0 mins @ £6 per hour = £0

Part 3 work: 30 mins @ £60 per hour = £30

Total: £36 per customer.

Our Perfect Service has been designed to deliver at the rate of demand which is one job every 30 mins and this means that the Part 1 and Part 3 resources are working continuously at 100% utilisation. There is no waste, no waiting, and no wobble. This is our Perfect Service and £36 per job is our Minimum Viable Price.
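For readers who prefer to see the arithmetic spelled out, here is a minimal sketch of the Minimum Viable Price calculation using the numbers above. The function names `part_cost` and `minimum_viable_price` are made up for this illustration; they are not part of any tool mentioned in this post.

```python
def part_cost(minutes, rate_per_hour):
    """Cost in pounds of `minutes` of work on a resource costing `rate_per_hour`."""
    return minutes / 60 * rate_per_hour


def minimum_viable_price(parts):
    """Sum the cost of every part of the service for one customer."""
    return sum(part_cost(minutes, rate) for minutes, rate in parts)


# (minutes of work, cost of the resource in pounds per hour)
perfect_service = [
    (30, 12),  # Part 1: administrative work
    (0, 6),    # Part 2: the queue, which is empty in the Perfect Service
    (30, 60),  # Part 3: the value work
]

price = minimum_viable_price(perfect_service)
print(f"£{price:.2f} per customer")
```

Changing the minutes in the Part 2 entry is a quick way to see how any waiting at all pushes the price above £36.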

The third step is to tarnish our Perfect Service to make it more realistic – and then to do whatever is necessary to counter the necessary imperfections so that we still produce 100% quality. To the outside world the quality of the service has not changed but it is no longer perfect – they need to wait a bit longer, and they may need to pay a bit more. Quality costs, remember! The question is – how much longer and how much more? If we can work that out and compare it with our Minimum Viable Price we will get a measure of the Cost of Reality.

We know that variation is always present in real systems – so let the first Dose of Reality be the variation in the time it takes to do the value work. What effect does this have? This apparently simple question is surprisingly difficult to answer in our heads – and we have chosen not to use “scarymatics” so let us run an empirical experiment and see what happens. We could do that with the real system, or we could do it on a model of the system. As our Perfect Service is so simple we can use a model. There are lots of ways to do this simulation. The technique used in this example is called discrete event simulation (DES), and I used a process simulation tool called CPS (www.SAASoft.com).

Let us see what happens when we add some random variation to the time it takes to do the Part 3 value work – the flow will not change, the average time will not change, we will just add some random noise – but not too much – something realistic, say 10%.

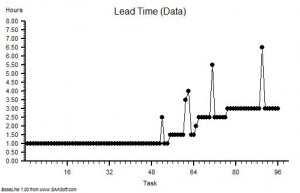

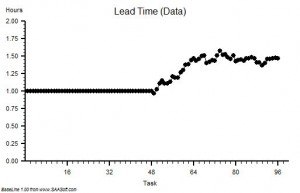

The chart shows the time from start to finish for each customer. To see the impact of adding the variation, the first 48 customers are served by our Perfect Service and then we switch to the Realistic Service. See what happens – the time in the process increases then sort of stabilises. This means we must have created a queue (i.e. Part 2 work) and that will require space to store and capacity to clear. When we add in these costs and work out our new Minimum Viable Price it comes out, in this case, to be £43.42 per task. That is an increase of over 20% and it gives us a measure of the Cost of the Variation. If we repeat the exercise many times we get a similar answer, not the same every time because the variation is random, but it is always an extra cost. It is never less than the price of the perfect process and it does not average out to zero. This may sound counter-intuitive until we understand the reason: when we add variation we need a bit of a queue to ensure there is always work for Part 3 to do; and that queue will form spontaneously when customers take longer than average. If there is no queue and a customer requires less than average time then the Part 3 resource will be idle for some of the time. That idle time cannot be stored and used later: time is not money. So what happens is that a queue forms spontaneously, so long as there is space for it, and it ensures there is always just enough work waiting to be done. It is a self-regulating system – the queue is called a buffer.
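The waiting-and-idling argument can be tried out with a few lines of Python. This is only a rough sketch, not the CPS model used for the chart: it assumes one Part 3 resource, a customer arriving exactly every 30 minutes, and a service time drawn uniformly within ±10% of 30 minutes (the shape of the noise is my assumption). It makes no attempt to reproduce the £43.42 figure, because that depends on cost details not listed here. The name `simulate_variation` is made up for this illustration.

```python
import random


def simulate_variation(n_customers, interval=30.0, mean_service=30.0,
                       spread=0.10, seed=1):
    """Return (waits, idle_minutes) for one resource fed at a fixed interval."""
    rng = random.Random(seed)
    wait = 0.0   # minutes the current customer queues before Part 3 starts
    idle = 0.0   # minutes the Part 3 resource spends with nothing to do
    waits = []
    for _ in range(n_customers):
        waits.append(wait)
        # Assumed noise model: uniform within +/- spread of the mean.
        service = mean_service * (1 + rng.uniform(-spread, spread))
        # Work still unfinished when the next customer arrives.
        leftover = wait + service - interval
        if leftover > 0:
            wait = leftover      # the next customer has to queue
        else:
            idle += -leftover    # the resource waits; this time is lost
            wait = 0.0
    return waits, idle


perfect_waits, perfect_idle = simulate_variation(200, spread=0.0)
real_waits, real_idle = simulate_variation(200, spread=0.10)
print("Perfect Service: longest wait", max(perfect_waits), "idle", perfect_idle)
print("Realistic Service: longest wait", round(max(real_waits), 1),
      "idle", round(real_idle, 1))
```

The point to notice is the asymmetry in the `if`: a slow customer makes the next one wait, but a fast customer cannot make the next one arrive early, so the time saved is simply lost.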

Let us see what happens when we take our Perfect Process and add a different form of variation – random errors. To prevent the error leaving the system and affecting our output quality we will repeat the work. If the errors are random and rare then the chance of getting it wrong twice for the same customer will be small so the rework will be a rough measure of the internal process quality. For a fair comparison let us use the same degree of variation as before – 10% of the Part 3 tasks have an error and need to be reworked – which in our example means work going to the back of the queue.

Again, to see the effect of the change, the first 48 tasks are from the Perfect System and after that we introduce a 10% chance of a task failing the quality standard and needing to be reworked: in this example 5 tasks failed, which is the expected rate. The effect on the start to finish time is very different from before – the times for the reworked tasks are clearly longer as we would expect, but the times for the other tasks get longer too. It implies that a Part 2 queue is building up and after each error we can see that the queue grows – and after a delay. This is counter-intuitive. Why is this happening? It is because in our Perfect Service we had 100% utilisation – there was just enough capacity to do the work when it was done right-first-time. So if we make errors we create extra demand and extra load, and that will exceed our capacity; we have created a bottleneck and the queue will form and it will continue to grow as long as errors are made. This queue needs space to store and capacity to clear. How much though? Well, in this example, when we add up all these extra costs we get a new minimum price of £62.81 – that is a massive 74% increase! Wow! It looks like errors create a much bigger problem for us than variation. There is another important learning point – random cycle-time variation is self-regulating and inherently stable; random errors are not self-regulating and they create inherently unstable processes.
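Here is a small sketch of why the queue never recovers. It is a simplification of the experiment, not the CPS model: time is chopped into 30-minute slots, one new task arrives in every slot, the single Part 3 resource completes exactly one attempt per slot, and each attempt independently fails with the stated 10% chance and goes to the back of the queue. The names `offered_load` and `rework_backlog` are made up for this illustration, and the sketch does not try to reproduce the £62.81 figure.

```python
import random
from collections import deque


def offered_load(error_rate):
    """Average attempts needed per task if each attempt fails independently."""
    return 1 / (1 - error_rate)


def rework_backlog(n_slots, error_rate, seed=1):
    """Return the number of tasks left waiting at the end of each slot."""
    rng = random.Random(seed)
    queue = deque()
    backlog = []
    for slot in range(n_slots):
        queue.append(slot)        # one new task arrives in every slot
        task = queue.popleft()    # the resource works on the oldest task
        if rng.random() < error_rate:
            queue.append(task)    # failed the check: back of the queue
        backlog.append(len(queue))
    return backlog


print("Load with 10% rework:", round(offered_load(0.10), 3))
perfect = rework_backlog(1000, error_rate=0.0)
with_errors = rework_backlog(1000, error_rate=0.10)
print("Backlog with no errors:", perfect[-1])
print("Backlog with 10% errors:", with_errors[-1])
```

Because the resource is already fully booked, every failed attempt adds one task to the queue and nothing ever takes it away again, so in this sketch the backlog is simply the running count of errors.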

Our empirical experiment has demonstrated three principles of process design for minimising the Cost of Reality:

1. Eliminate sources of errors by designing error-proofed right-first-time processes that prevent errors happening.

2. Ensure there is enough spare capacity at every stage to allow recovery from the inevitable random errors.

3. Ensure that all the steps can flow uninterrupted by allowing enough buffer space for the critical steps.
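The second principle can be illustrated with a rough sketch that compares a resource with no spare capacity against one with some. The assumptions are mine, not results from the experiment above: tasks arrive every 30 minutes, each attempt fails independently with a 10% chance, all the work a task will eventually need is counted when it arrives, and the spare-capacity case is modelled by a hypothetical 25-minute attempt time. The name `unfinished_work` is made up for this illustration.

```python
import random


def unfinished_work(n_tasks, service_minutes, interval=30.0,
                    error_rate=0.10, seed=1):
    """Return the minutes of work still outstanding just before each arrival."""
    rng = random.Random(seed)
    backlog = 0.0
    history = []
    for _ in range(n_tasks):
        attempts = 1
        while rng.random() < error_rate:   # each failed attempt is repeated
            attempts += 1
        # Add this task's work, then let the resource work for one interval.
        backlog = max(0.0, backlog + attempts * service_minutes - interval)
        history.append(backlog)
    return history


# Same seed, so both cases see exactly the same pattern of errors.
no_spare = unfinished_work(1000, service_minutes=30.0)    # 100% utilisation
with_spare = unfinished_work(1000, service_minutes=25.0)  # hypothetical spare capacity
print("Outstanding work, no spare capacity:", no_spare[-1])
print("Outstanding work, with spare capacity:", with_spare[-1])
```

With no spare capacity the outstanding work can only go up; with spare capacity the resource can catch up between errors, which is the recovery the second principle asks for.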

With these Three Principles of cost-effective design in mind we can now predict what will happen if we combine a not-for-profit process, with a rising demand, with a rising expectation, with a falling budget, and with an inspect-and-rework process design: we predict everyone will be unhappy. We will all be miserable because the only way to stay in budget is to cut the lower priority value work and reinvest the savings in the rising cost of checking and rework for the higher priority jobs. But we have a problem – our activity will fall, so our revenue will fall, and despite the cost cutting the budget still doesn’t balance because of the increasing cost of inspection and rework – and we enter the death spiral of financial decline.

The only way to avoid this fatal financial tailspin is to replace the inspection-and-rework habit with a right-first-time design; before it is too late. And to do that we need to learn how to design and deliver right-first-time processes.

Charts created using BaseLine