Sickness, illness, ill health, unhealthy, disease, disorder, distress are all words that we use when how we feel falls short of how we expect to feel. The words imply an illness continuum and each of us appears to use different thresholds as action alerts.

The first threshold is crossed when we become aware that all is not right and our response is to enter a self-diagnosis and self-treatment mindset. This threshold is context-dependent; we use external references to detect when we have strayed too far from the norm – we compare ourselves with others. This early warning system works most of the time – after all, chemists make their main business from over-the-counter (OTC) remedies!

If the first stage does not work, we cross the second threshold: we accept that we need expert assistance and we switch into a different mode of thinking – the “sick role”. Crossing the second threshold is a big psychological step that implies a perceived loss of control and power – and explains why many people put off seeking help. They enter a phase of denial, self-deception and self-justification which can be very resistant to change.

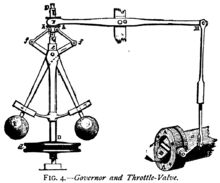

The same is true of organisations – when they become aware that they are performing below expectation then a “self-diagnosis” and “self-treatment” is instigated, except that it is called something different such as an “investigation” or “root cause analysis” and is followed by “recommendations” and an “action plan”. The requirements for this to happen are an ability to become aware of a problem and a capability to understand and address the root cause both effectively and efficiently. This is called dynamic stability or “homeostasis” and is a feature of many systems. The image of a centrifugal governor is a good example – it was one of the critical innovations that allowed the power of steam to be harnessed safely and was a foundation stone of the industrial revolution. The design is called a negative feedback stabiliser and it has a drawback – there may be little or no external sign of the effort required to maintain the stability.

Problems arise when parts of this expectation-awareness-feedback-adjustment process are missing, do not work, or become disconnected. If there is an unclear expectation then it is impossible to know when and how to react. Not being clear what “healthy” means leads to confusion. It is too easy to create a distorted sense of normality by choosing a context where everyone is the same as you – “birds of a feather flock together”.

Another danger is to over-simplify the measure of health and to focus on one objective dimension – money – with the assumption that if the money is OK then the system must be OK. This is an error of logic because although a healthy system implies healthy finances, the reverse is not the case – a business can be both making money and heading for disaster.

Failure can also happen if the most useful health metrics are not measured, are measured badly, or are not communicated in a meaningful way. Very often metrics are not interpreted in context, not tracked over time, and not compared with the agreed expectation of health. These multiple errors of omission lead to counterproductive behaviour such as the use of delusional ratios and arbitrary targets (DRATs), short-termism and “chasing the numbers” – all of which can further erode the underlying health of the system – like termites silently eating the foundations of your house. By the time you notice, it is too late – the foundations have crumbled into dust!

To achieve and maintain systemic health it is necessary to include the homeostatic mechanisms at the design stage. Trying to add or impose the feedback functions afterwards is less effective and less efficient. A healthy system is designed with sensitive feedback loops that indicate the effort required to maintain dynamic stability – and if that effort is increasing then that alone is cause for further investigation – often long before the output goes out of specification. Healthy systems are economic and are designed to require a minimum of effort to maintain stability and sustain performance – good design feels effortless compared with poor design. A system that only detects and reacts to deviations in outputs is an inferior design – it is like driving by looking in the rear-view mirror!
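
For readers who like to see an idea in numbers, here is a minimal sketch of a negative feedback stabiliser written in Python. Everything in it is an assumption made up for illustration: the function name `simulate`, the target of 100, the tolerance of 5, the limited `capacity` for corrective effort, and the steadily growing load. It is a toy model, not a description of any real organisation or of the centrifugal governor itself. The loop holds the output close to target by spending more and more effort, and it records two alerts: one that watches the effort and one that watches only the output.

```python
def simulate(steps=80, target=100.0, tolerance=5.0, gain=0.5,
             capacity=20.0, load_growth=0.5):
    """Toy negative-feedback loop under a slowly growing load.

    All numbers are hypothetical. Returns the step at which an
    effort-based alert fires and the step at which an output-based
    alert fires (None if an alert never fires).
    """
    effort = 0.0          # corrective effort currently being applied
    effort_alert = None   # first step where effort exceeds half of capacity
    output_alert = None   # first step where output leaves the tolerance band

    for step in range(steps):
        load = load_growth * step          # the disturbance grows steadily
        output = target - load + effort    # effort compensates for the load

        if effort_alert is None and effort > 0.5 * capacity:
            effort_alert = step
        if output_alert is None and abs(output - target) > tolerance:
            output_alert = step

        # Negative feedback: adjust effort in proportion to the shortfall,
        # but never beyond the capacity available to the system.
        error = target - output
        effort = min(capacity, max(0.0, effort + gain * error))

    return effort_alert, output_alert


if __name__ == "__main__":
    effort_alert, output_alert = simulate()
    print("effort alert at step:", effort_alert)
    print("output alert at step:", output_alert)
```

With these invented numbers the effort alert fires well before the output alert. The output looks healthy right up until the capacity to compensate runs out, which is the rear-view mirror problem in miniature.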

Healthy systems were either designed to be healthy from the start or have evolved from unhealthy ones – the books by Jim Collins describe both routes. “Built to Last” describes organisations that have endured because they were destined to be great from the start. “Good to Great” describes organisations that have evolved from unremarkable performers into great performers. There is a common theme to great companies irrespective of their genesis – data, information, knowledge, understanding and, most important of all, a wise leader.